js-howto

BEST-PRACTICE: js parse float to int
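The note does not record which approach was settled on, so the following is only a sketch comparing the common built-in options. The helper name toIntVariants and the sample values are illustrative assumptions.

```javascript
// Returns the result of each common float-to-integer conversion for x.
function toIntVariants(x) {
  return {
    trunc: Math.trunc(x), // drops the fractional part
    floor: Math.floor(x), // rounds toward -Infinity
    round: Math.round(x), // rounds to the nearest integer
  };
}

console.log(toIntVariants(4.7));
console.log(toIntVariants(-4.7));

// parseInt is for strings: it reads leading digits and stops at the first other character.
console.log(parseInt('4.7px', 10));
```

The three Math functions only disagree on negative or fractional edge cases, so the choice mostly depends on which rounding direction is wanted.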

ref:

TODO: prettier plugin of decorators

This experimental syntax requires enabling one of the following parser plugin(s): 'decorators-legacy, decorators' (9:0)

jsdoc

ref:

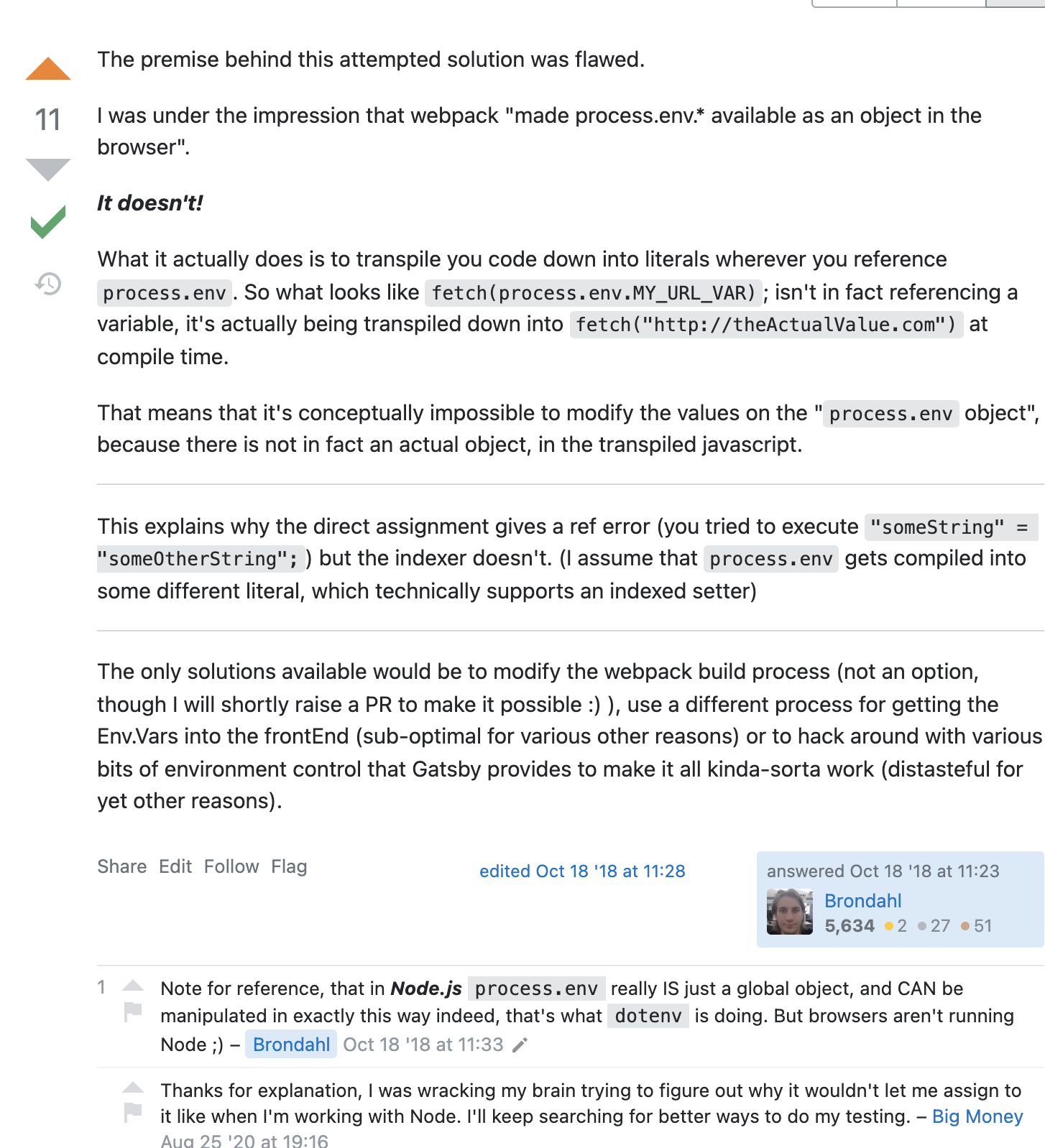

modify process.env

This answer explains why the variable cannot be changed: at build time the process.env reference has already been compiled into a constant string. environment variables - Can't set values on process.env in client-side Javascript - Stack Overflow

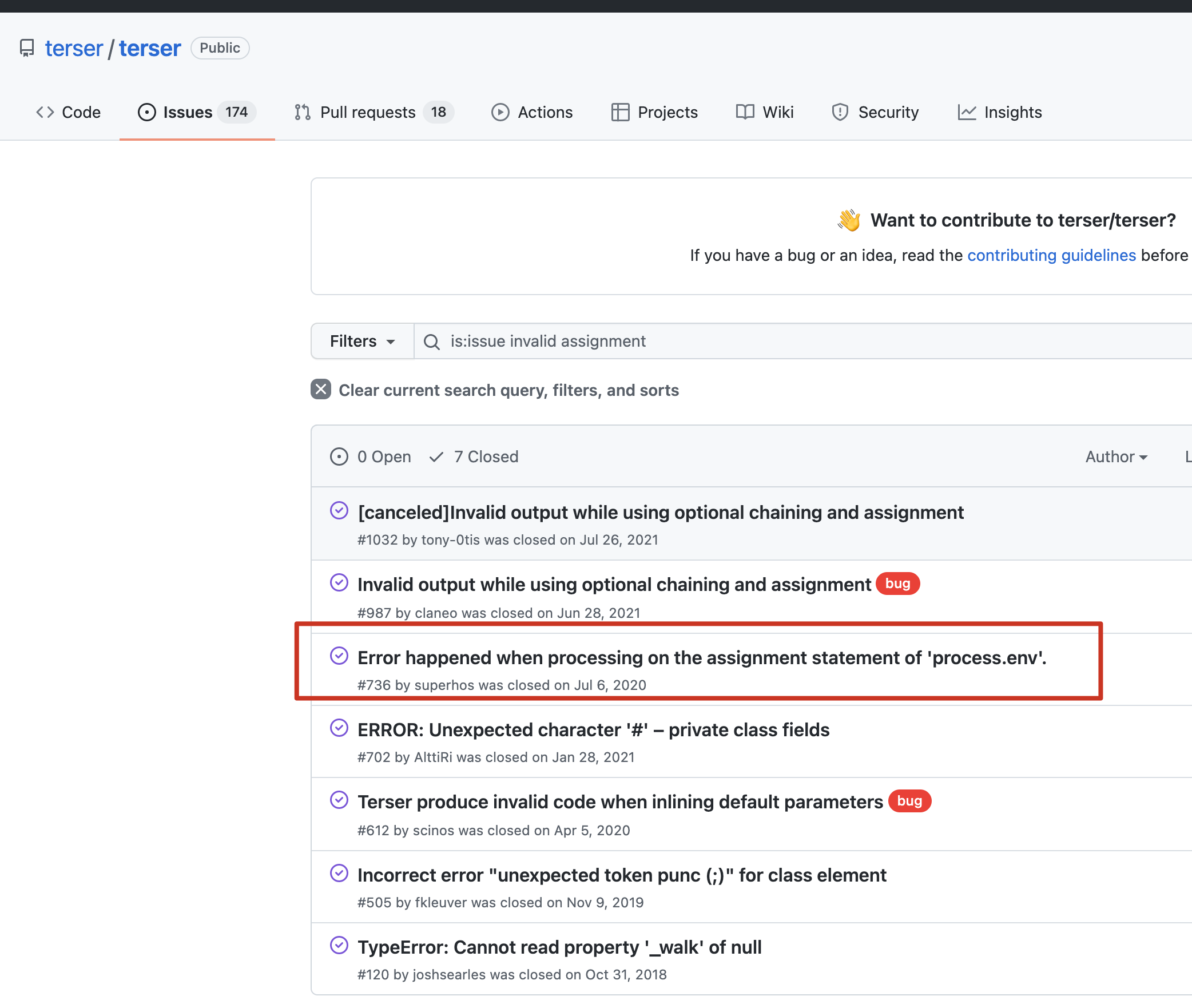

Here is the same problem again, this time breaking the terser plugin: Error happened when processing on the assignment statement of 'process.env'. · Issue #736 · terser/terser

I finally found a superb solution: define an alias for process.env with DefinePlugin, so that the assignment survives compilation:

// .erb/configs/webpack.config.main.prod.ts:75

new webpack.DefinePlugin({

VAR_ENV: 'process.env',

}),

Then I can write the following: VAR_ENV is replaced with process.env at build time, so assigning dbUrl to it passes the compile-time check.

VAR_ENV.DATABASE_URL = dbUrl;
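To see why the alias helps, here is a small simulation. It is only a sketch: it mimics compile-time substitution with plain string replacement (the real plugin works on parsed code), and the helper names substitute and parses as well as the inlined value are hypothetical.

```javascript
// Simulation only: mimics compile-time substitution with plain string replacement.
// The real DefinePlugin operates on parsed code, not on raw strings.
function substitute(source, definitions) {
  let output = source;
  for (const [name, replacement] of Object.entries(definitions)) {
    output = output.split(name).join(replacement);
  }
  return output;
}

// Returns true when the source text parses as a function body (it is never run).
function parses(source) {
  try {
    new Function(source);
    return true;
  } catch (error) {
    return false;
  }
}

// Case 1: the build inlines the variable as a string literal (hypothetical value).
const inlined = substitute('process.env.DATABASE_URL = dbUrl;', {
  'process.env.DATABASE_URL': '"file:./dev.db"',
});

// Case 2: the alias is expanded back to process.env, leaving a valid assignment.
const aliased = substitute('VAR_ENV.DATABASE_URL = dbUrl;', {
  VAR_ENV: 'process.env',
});

console.log(inlined, parses(inlined));
console.log(aliased, parses(aliased));
```

In the first case the left-hand side becomes a string literal, which is not a valid assignment target; in the second the statement is still an ordinary property assignment.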

node exec sub commands

I came to the conclusion that I should choose exec over execFile for more universal use: exec runs the command through a shell, so pipes and globs like the ones below work.

The core usage may be:

const {exec} = require('child_process');

exec('cat *.js missing_file | wc -l', (error, stdout, stderr) => {

if (error) {

console.error(`exec error: ${error}`);

return;

}

console.log(`stdout: ${stdout}`);

console.error(`stderr: ${stderr}`);

});
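For use with async/await, the callback form above can be wrapped with util.promisify. This is a minimal sketch; the helper name runShell is an assumption, not something from the notes.

```javascript
const {exec} = require('child_process');
const {promisify} = require('util');

const execAsync = promisify(exec);

// Runs a shell command line and resolves with its trimmed stdout.
// The promise rejects if the command exits with a non-zero code.
async function runShell(commandLine) {
  const {stdout} = await execAsync(commandLine);
  return stdout.trim();
}

runShell('echo hello')
  .then((out) => console.log(`stdout: ${out}`))
  .catch((error) => console.error(`exec error: ${error.message}`));
```

Because the string is handed to a shell, it should never be built from untrusted input; execFile with an argument array is the safer choice in that case.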

ref:

webpack

ref:

A good article that thoroughly explains what webpack is and how to use it.

receive arg from command line [Standard Method (no library)]

// the first arg

process.argv[2]

The arguments are stored in process.argv

Here are the node docs on handling command line args:

process.argv is an array containing the command line arguments. The first element will be 'node', the second element will be the name of the JavaScript file. The next elements will be any additional command line arguments.

// print process.argv

process.argv.forEach(function (val, index, array) {

console.log(index + ': ' + val);

});

node process-2.js one two=three four

This would generate:

- node

- /Users/mjr/work/node/process-2.js (the file)

- one (the first argv)

- two=three

- four
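Building on the output above, a small parser can separate plain arguments from key=value pairs. This is a sketch under the assumption that options are always written as key=value; the function name parseArgs is hypothetical.

```javascript
// Splits user arguments into positional values and key=value options.
function parseArgs(argv) {
  const positional = [];
  const options = {};
  // Skip the node executable (index 0) and the script path (index 1).
  for (const arg of argv.slice(2)) {
    const eq = arg.indexOf('=');
    if (eq > 0) {
      options[arg.slice(0, eq)] = arg.slice(eq + 1);
    } else {
      positional.push(arg);
    }
  }
  return {positional, options};
}

console.log(parseArgs(process.argv));
```

For the command shown earlier, this would put one and four into positional and three under the key two.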

ref:

change string to number [DON'T]

The discussions below advise against changing a variable's type from string to number.

ref:

Angular typescript convert string to number - Stack Overflow

angular - How to properly change a variable's type in TypeScript? - Stack Overflow

best practice to use Exceptions

catch error

function handleError() {

try {

throw new RangeError();

} catch (e) {

switch (e.constructor) {

case Error:

return console.log('generic');

case RangeError:

return console.log('range');

default:

return console.log('unknown');

}

}

}

handleError();

extend error

class MyError extends Error {

constructor(message) {

super(message);

this.name = 'MyError';

}

}
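One thing to watch when combining the two snippets: a switch on e.constructor compares exact classes, so a MyError instance would fall through to the default branch. A sketch of an instanceof-based alternative follows; the function name classify and the returned labels are assumptions.

```javascript
class MyError extends Error {
  constructor(message) {
    super(message);
    this.name = 'MyError';
  }
}

// Checks the most specific classes first, because every subclass
// instance is also an instance of Error.
function classify(e) {
  if (e instanceof MyError) return 'custom';
  if (e instanceof RangeError) return 'range';
  if (e instanceof Error) return 'generic';
  return 'unknown';
}

try {
  throw new MyError('boom');
} catch (e) {
  console.log(classify(e), e.name, e.message);
}
```

The order of the checks matters: putting the Error test first would classify every error as generic.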

ref:

[HANDBOOK] fs read file, async and part

TODO: From my point of view, the fs.createReadStream is a ???

ref:

node.js - How to read file with async/await properly? - Stack Overflow

javascript - node.js/ read 100 first bytes of a file - Stack Overflow

javascript - how to use async/await with fs.createReadStream in node js - Stack Overflow

javascript - Why does Node.js' fs.readFile() return a buffer instead of string? - Stack Overflow

csv read and parse

modify header

buffer padding algorithm

ref: Node.js buffer with padding (right justified fill) example

const buf = Buffer.alloc(32);

const a = Buffer.from('1234', 'hex');

a.copy(buf, buf.length - a.length);

console.log(buf.toString('hex')); // 0000000000000000000000000000000000000000000000000000000000001234

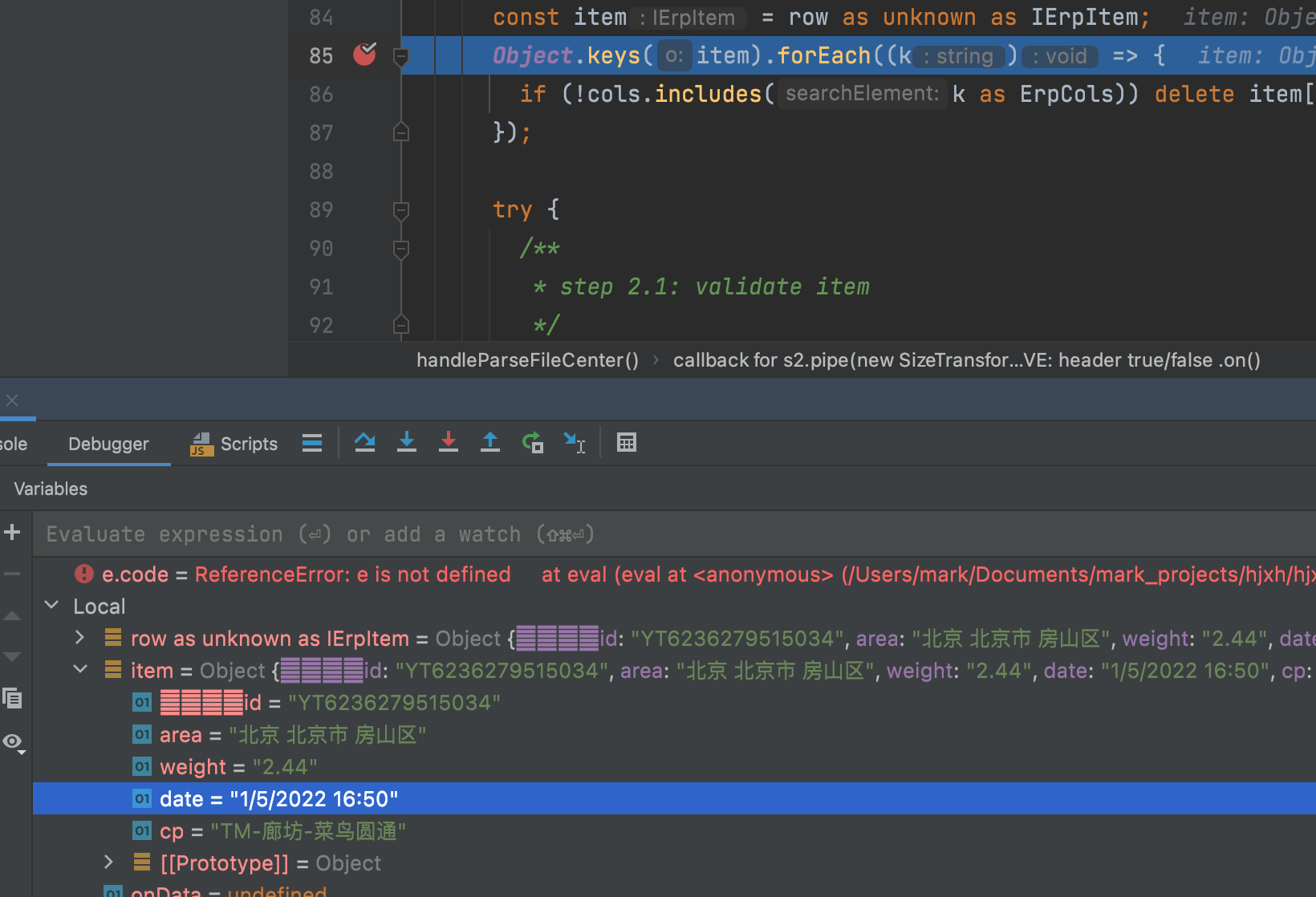

After this hard modification a new problem arose: the padding shows up as blank placeholders (update: they are zero bytes, \0).

The solution is to strip these characters, ref: node.js - Removing a null character from a string in javascript - Stack Overflow

// 1.

s.replace(/\0/g, '');

// 2.

s.replaceAll('\0', '');
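Putting the padding and the clean-up together gives a quick round trip. This is a sketch only; the helper names padLeft and stripNulls and the sample header text are assumptions.

```javascript
// Right-justifies `data` inside a zero-filled buffer of `size` bytes.
function padLeft(data, size) {
  if (data.length > size) {
    throw new RangeError('data is longer than the target size');
  }
  const out = Buffer.alloc(size); // Buffer.alloc zero-fills the buffer
  data.copy(out, size - data.length);
  return out;
}

// Removes every NUL character from a string.
function stripNulls(text) {
  return text.replace(/\0/g, '');
}

const padded = padLeft(Buffer.from('id', 'utf8'), 6);
console.log(padded.toString('hex'));
console.log(stripNulls(padded.toString('utf8')));
```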

Then I applied this transform to the headers in fast-csv:

// src/main/modules/parseFile/center.ts:78

.pipe(

csv.parse({

headers: (headers) => headers.map((h) => h?.replace(/\0/g, '')),

})

) // PR: header true/false; ref: https://stackoverflow.com/a/22809513/9422455

However, this felt a little inelegant, so I came up with a new idea:

// src/main/modules/parseFile/handler/checkCsvEncoding.ts:85

const bufToWrite = Buffer.alloc(L);

const tempBuf = iconv.encode(s, encoding);

const offset = L - tempBuf.length;

tempBuf.copy(bufToWrite, offset);

fs.write(fd, bufToWrite, 0, L, 0, (err) => {

// prettier-ignore

return !err? resolve({

useIconv: encoding === ValidEncoding.gbk,

offset

}) : reject(new GenericError(errorModifyingHeader, `replace error: ${err}, string: ${s}, bufToWrite: ${bufToWrite}`));

});

I saved the offset so that, when I open this file again, I can use it to quickly locate the real start of the data and skip the \0 padding.
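Re-opening the file at the saved offset could look like the sketch below. It assumes the remaining content is UTF-8 text and uses the start option of fs.createReadStream; the function name readFromOffset is hypothetical.

```javascript
const fs = require('fs');

// Reads a file as UTF-8 text, skipping the first `offset` bytes.
function readFromOffset(filePath, offset) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    fs.createReadStream(filePath, {start: offset})
      .on('data', (chunk) => chunks.push(chunk))
      .on('error', reject)
      .on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
  });
}
```

The same stream could be piped into a CSV parser instead of being collected into a string.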

And it did work!

then, what about repeatability?

After some mental struggle, I gave up on the wonderful write-back idea, since it would destroy the raw data and make re-using the sheet inflexible or costly.

So I no longer modify the data and use a header map instead:

// src/main/modules/parseFile/center.ts:72

const progress = new ParsingProgress();

const headers = (headers) =>

headers.map((header) => (header === SIGNAL_ID ? COL_ID : header));

s2.pipe(

new SizeTransformer(fs.statSync(fp).size, (pct) =>

progress.updateSizePct(pct),

),

).pipe(csv.parse({headers})); // PR: header true/false; ref: https://stackoverflow.com/a/22809513/9422455

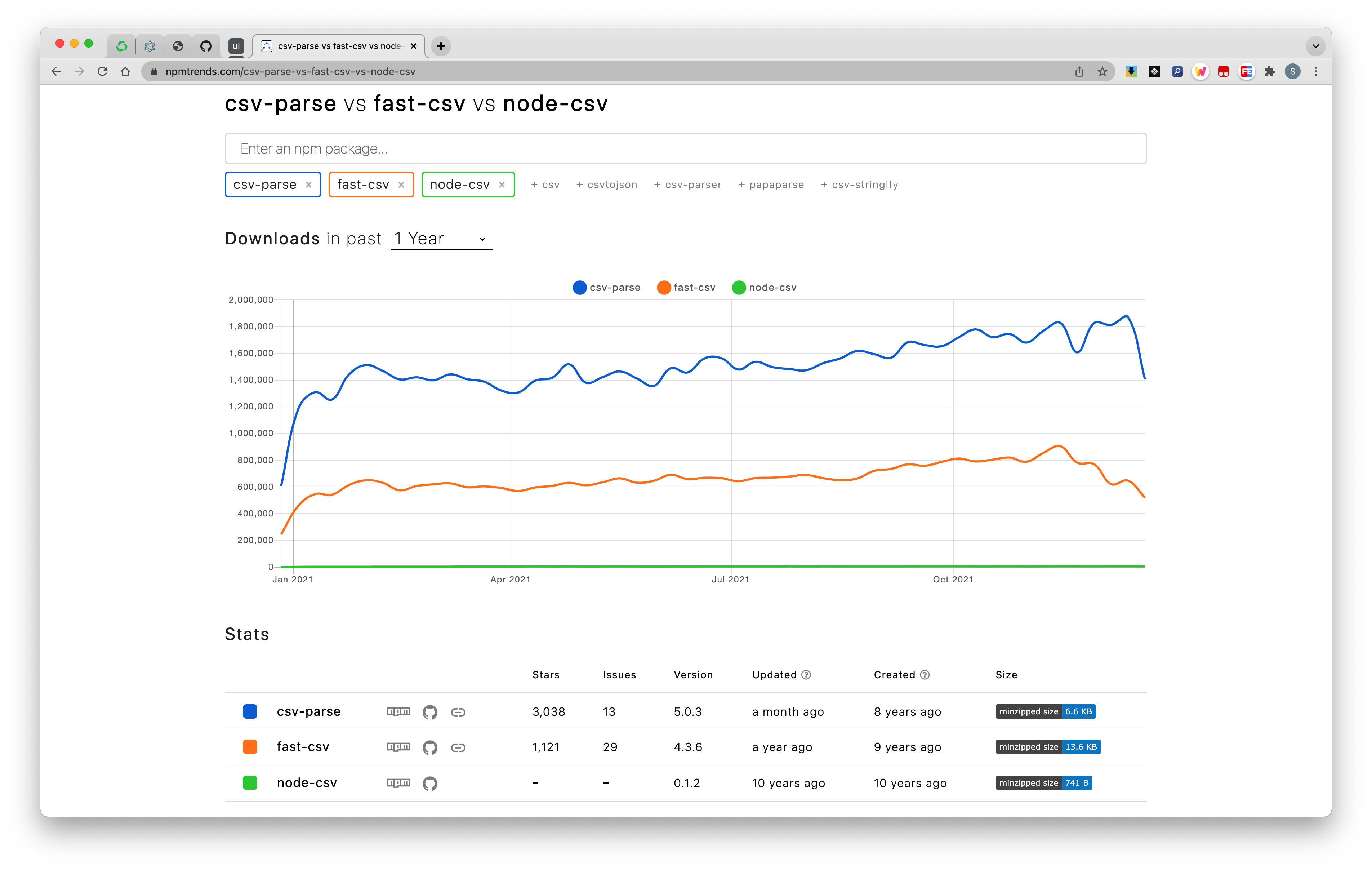

node-csv or fast-csv

First, I chose csv (node-csv) since it has more stars than fast-csv.

However, I soon found it too low-level for parsing Chinese files, since csv can't handle gbk encoding, at least not automatically.

The underlying reason is that fs.createReadStream doesn't support the gbk encoding.

In contrast, fast-csv helped me a lot.

If I just use fast-csv to read a gbk file, garbled text (mojibake) is shown by default (but no error is raised); the output becomes correct once we add the iconv conversion pipes.

import * as csv from '@fast-csv/parse';

import * as fs from 'fs';

import iconv from 'iconv-lite';

fs.createReadStream('./samples/erp_md.csv')

.pipe(iconv.decodeStream('gbk'))

.pipe(iconv.encodeStream('utf-8'))

.pipe(csv.parse({headers: true}))

.on('error', (error) => console.error(error))

.on('data', (row) => console.log(row))

.on('end', (rowCount: number) => console.log(`Parsed ${rowCount} rows`));

In conclusion, I don't know whether fast-csv processes data faster than node-csv, but it does free me from a lot of annoying encoding problems, which is its own kind of speed!

So I would not hesitate to choose fast-csv.
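The garbled-but-no-error behaviour can be reproduced without any CSV library. The sketch below swaps in the built-in TextDecoder for iconv-lite purely for illustration, and assumes a Node build with full ICU so that the gbk label is available.

```javascript
// 0xD6D0 and 0xCEC4 are the GBK encodings of "中" and "文".
const gbkBytes = Uint8Array.from([0xd6, 0xd0, 0xce, 0xc4]);

// Reading the bytes as UTF-8 yields replacement characters, not an error.
const garbled = new TextDecoder('utf-8').decode(gbkBytes);

// Decoding with the right label recovers the text.
const decoded = new TextDecoder('gbk').decode(gbkBytes);

console.log(garbled);
console.log(decoded);
```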

ref:

iconv: essential for decoding gbk!

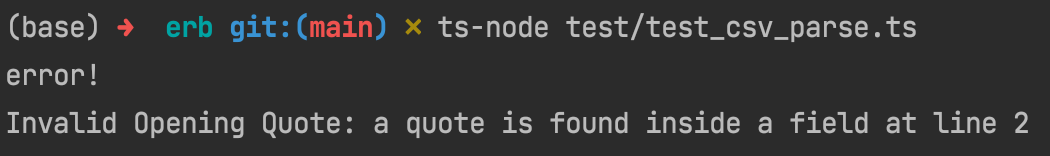

fast-csv problem of quotes

ref:

csv not closed

I tested this and found that the stream is destroyed but not closed, since the file is read block by block but parsed row by row.

So the better way is to detect the header first.
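Detecting the header first could be done by reading only the first line and releasing the file right away. This is a sketch that assumes a UTF-8 file; the function name readFirstLine is hypothetical.

```javascript
const fs = require('fs');
const readline = require('readline');

// Resolves with the first line of the file, then releases the file handle.
async function readFirstLine(filePath) {
  const stream = fs.createReadStream(filePath, {encoding: 'utf8'});
  const rl = readline.createInterface({input: stream, crlfDelay: Infinity});
  try {
    for await (const line of rl) {
      return line; // stop after the first row
    }
    return ''; // empty file
  } finally {
    rl.close();
    stream.destroy();
  }
}
```

The returned line can then be checked for the expected column names before the full parse is started.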

run js in different envs

browser env

Just open Chrome and use its console.

node env

node .

node + esm

node --experimental-modules .

And then, in the console, use dynamic import like this: var s; import("XXX").then(r => s = r.default).

!!!warning the node console (REPL) doesn't support static import statements, hence the dynamic import
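The dynamic import pattern can be wrapped in a small helper. This is a sketch; the function name loadDefault is an assumption, and the built-in path module stands in for the real package.

```javascript
// Loads a module with dynamic import() and returns its default export,
// falling back to the namespace object when there is no default.
async function loadDefault(specifier) {
  const namespace = await import(specifier);
  return namespace.default ?? namespace;
}

loadDefault('path').then((path) => console.log(path.basename('/tmp/a.txt')));
```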

ref:

node + esm + ts

- install ts-node

npm i -D ts-node

tsc --init

- modify the tsconfig.json file

// tsconfig.json

{

"compilerOptions": {

"module": "esnext" /* Specify what module code is generated. */,

"moduleResolution": "node" /* Specify how TypeScript looks up a file from a given module specifier. */,

"esModuleInterop": true /* Emit additional JavaScript to ease support for importing CommonJS modules. This enables `allowSyntheticDefaultImports` for type compatibility. */

}

}

- run node --loader ts-node/esm FILE instead of ts-node FILE

ref:

use __filename and __dirname in ESM

import {dirname} from 'path';

import {fileURLToPath} from 'url';

const __filename = fileURLToPath(import.meta.url);

const __dirname = dirname(__filename);

ref: